An Incomplete Look at Vulnerability Databases & Scoring Methodologies

A look at some of the fundamental vulnerability databases and scoring methodologies currently in use in the industry

In this article I take an incomplete look at the current landscape of Vulnerability Databases & Scoring Methodologies. I say incomplete because there is much more that can be, and has been, written on the topic, but this is a look at some of the fundamental databases and scoring methodologies currently in use in the industry, as well as some that are beginning to emerge as the digital landscape evolves.

One critical aspect of the conversation around application security and vulnerability management is the method by which vulnerabilities are categorized and scored. This is an important aspect of the push for software transparency and, more importantly, software security. Without understanding what software vulnerabilities are present and how those vulnerabilities are scored, it is difficult for organizations to prioritize vulnerabilities for remediation. Software producers can prioritize vulnerabilities for remediation to reduce risk to their customers, and inform their customers about the severity and exploitability of vulnerabilities in their products. Software consumers can understand the inherent risk of the software they’re using and make risk-informed decisions around its consumption and use. So, let’s look at some of the common terms associated with software vulnerabilities.

Common Vulnerabilities and Exposures (CVE)

CVE stands for Common Vulnerabilities and Exposures and is oriented around a program with the goal of identifying, defining and cataloging publicly disclosed cybersecurity vulnerabilities. As an organization, CVE involves participation from researchers and organizations around the world who serve as partners to help discover and publish vulnerabilities, including descriptions of the vulnerabilities in a standardized format.

The origins of the CVE program and concept can be traced back to a MITRE whitepaper from January 1999 titled “Towards a Common Enumeration of Vulnerabilities” by David Mann and Steven Christey. The whitepaper laid out the cybersecurity landscape at the time, which included several disparate vulnerability databases with unique formats, criteria and taxonomies. The authors explained how this impacted interoperability and the sharing of vulnerability information. Among the roadblocks to interoperability, the authors cited inconsistent naming conventions, managing similar information from diverse sources and the complexity of mapping between databases.

To facilitate interoperability between security tooling, CVE was proposed as a standardized list to help enumerate all known vulnerabilities, assign standard unique names to vulnerabilities and be open and shareable without restrictions.

This effort has grown into an industry staple over more than 20 years, with over 200 organizations from over 35 countries participating and over 100,000 vulnerabilities represented in the CVE system. Participating organizations are referred to as CVE Numbering Authorities (CNAs). CNAs consist of software vendors, open-source software projects, bug bounty service providers and researchers. They’re authorized by the CVE program to assign unique CVE IDs to vulnerabilities and publish CVE records to the overarching CVE list. Organizations can request to become a CNA but must meet specific requirements, such as having a public vulnerability disclosure policy, having a public source for vulnerability disclosures and agreeing to the CVE Terms of Use.

NVD, covered in the next section, collaborates closely with CVE, but they are distinct efforts. CVE originated at MITRE and is a collaborative, community-driven effort. NVD, on the other hand, was launched by NIST. That said, both programs are sponsored by the U.S. Department of Homeland Security and the Cybersecurity and Infrastructure Security Agency (CISA) and are available for free public use. While CVE manifests as a set of records for vulnerabilities, including identification numbers, descriptions and public references, NVD is a database that synchronizes with the CVE list so that there is parity between disclosed CVEs and NVD’s records. NVD builds on the information included in CVE records to provide additional information such as remediations, severity scores and impact ratings. NVD also makes CVEs searchable by advanced metadata and fields.

CVE also consists of several Working Groups with distinct focus areas, including automation, strategic planning, coordination and quality. These working groups strive to improve the overall CVE program and its value and quality for the community. In addition to the working groups, CVE also has a CVE Program Board to ensure the program meets the needs of the global cybersecurity and technology community. This board oversees the program, steers its strategic direction and evangelizes the program in the community.

Some fundamental terms to understand in relation to the CVE Program are Vulnerability, CVE Identifier, Scope, CVE List and CVE Record. CVE defines a vulnerability as a flaw in software, firmware, hardware or services that results from a weakness that can be exploited and that has a negative impact on the confidentiality, integrity or availability (the CIA triad) of impacted components. CVE Identifiers, or CVE IDs, are unique alphanumeric identifiers assigned by the CVE Program to specific vulnerabilities; they enable automation and let multiple parties discuss the same vulnerability. A Scope is the set of hardware, software or services for which an organization within the CVE Program has distinct responsibility. The CVE List is the overarching catalog of CVE Records that are identified by or reported to the CVE Program. A CVE Record provides descriptive data about a vulnerability associated with a CVE ID and is provided by CNAs, as previously discussed. CVE Records can be Reserved, Published or Rejected. Reserved is the initial state, when a CNA has reserved the CVE ID; Published is when the CNA has populated the data to create the CVE Record; and Rejected is when a CVE ID and Record should no longer be used, although they remain on the CVE List for future reference of invalid CVE Records.
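Because CVE IDs exist to enable automation, tools commonly validate them before doing any lookups. The Python sketch below assumes the current CVE ID syntax: `CVE-`, a four-digit year, a hyphen and a sequence number of four or more digits. The `CveId` type and `parse_cve_id` function are hypothetical names chosen for illustration.

```python
import re
from typing import NamedTuple, Optional

# Assumed CVE ID syntax: "CVE-", a four-digit year, "-", then a sequence
# number of at least four digits (longer sequences are allowed).
CVE_ID_PATTERN = re.compile(r"CVE-(\d{4})-(\d{4,})")


class CveId(NamedTuple):
    year: int
    sequence: str  # kept as a string so leading zeros (e.g. "0160") survive


def parse_cve_id(text: str) -> Optional[CveId]:
    """Return the parts of a well-formed CVE ID, or None if it is malformed."""
    match = CVE_ID_PATTERN.fullmatch(text.strip())
    if match is None:
        return None
    year, sequence = match.groups()
    return CveId(int(year), sequence)


if __name__ == "__main__":
    for candidate in ("CVE-2021-44228", "CVE-2014-0160", "CVE-2021-123"):
        print(candidate, "->", parse_cve_id(candidate))
```

Here `CVE-2021-123` is rejected because its sequence number has only three digits.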

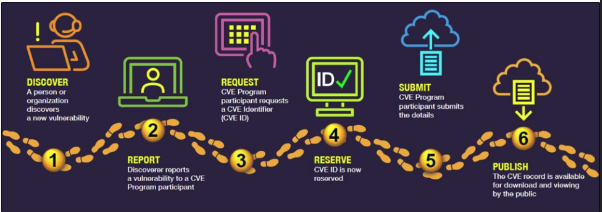

CVE Records undergo a defined lifecycle as well. This lifecycle includes the stages Discover, Report, Request, Reserve, Submit and Publish. Initially, an individual or organization discovers a new vulnerability and reports it to the CVE Program or a program participant, which requests a CVE ID. That ID is reserved while the CVE Program participant submits the associated details. Finally, the CVE Record is published for download and viewing by the public so that appropriate response activities can begin, if warranted. Source: CVE Record Lifecycle
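The lifecycle stages and the record states can be modeled together as a small state machine. This is a minimal Python sketch, not an official CVE Program data model: the enum and function names are hypothetical, and the transition table assumes that a reserved ID is either published or rejected, and that a published record may later be rejected while still remaining on the CVE List.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    """CVE Record lifecycle stages, in the order described above."""
    DISCOVER = 1
    REPORT = 2
    REQUEST = 3
    RESERVE = 4
    SUBMIT = 5
    PUBLISH = 6


class RecordState(Enum):
    RESERVED = "RESERVED"
    PUBLISHED = "PUBLISHED"
    REJECTED = "REJECTED"


# Assumed transitions: a reserved ID is either published or rejected, and a
# published record may later be rejected (it still stays on the CVE List).
ALLOWED_TRANSITIONS = {
    RecordState.RESERVED: {RecordState.PUBLISHED, RecordState.REJECTED},
    RecordState.PUBLISHED: {RecordState.REJECTED},
    RecordState.REJECTED: set(),
}


def record_state_for(stage: Stage) -> Optional[RecordState]:
    """Record state implied by a lifecycle stage (None before an ID exists)."""
    if stage.value < Stage.RESERVE.value:
        return None
    if stage is Stage.PUBLISH:
        return RecordState.PUBLISHED
    return RecordState.RESERVED  # reserved while details are being submitted


def can_transition(current: RecordState, new: RecordState) -> bool:
    return new in ALLOWED_TRANSITIONS[current]
```

Keeping rejected records as a terminal state, rather than deleting them, mirrors the way rejected CVE IDs remain on the list for reference.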

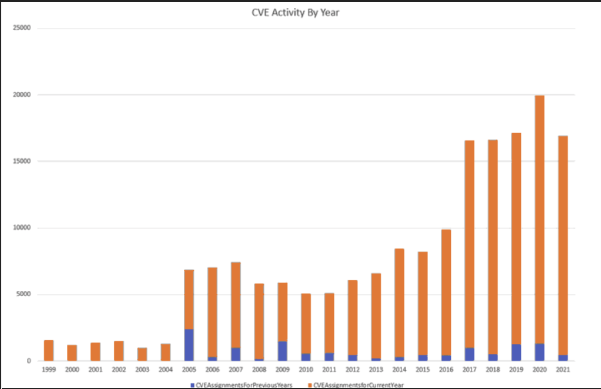

The CVE Program, and the associated CVE List of CVE Records, has grown steadily since the program’s inception. According to the published CVE Record Metrics, the number of CVE Records published per quarter has grown from the hundreds to over 6,000 at the time of this writing in 2022. This is likely due to the growing popularity of the program, an increase in security researchers, an uptick in involvement by software vendors and the overarching growth and visibility of cybersecurity.

National Vulnerability Database (NVD)

Vulnerability data helps inform activities that drive down risk for both software producers and consumers, and provides broad industry knowledge of the vulnerabilities present in the ecosystem. While there are several vulnerability databases in the industry, one of the most notable examples is NIST’s National Vulnerability Database (NVD). NVD is “a comprehensive cybersecurity vulnerability database that integrates all publicly available U.S. Government vulnerability resources and provides references to industry resources”.

NVD was formed in 2005 and reports on Common Vulnerabilities and Exposures (CVEs), discussed above. The origins of NVD trace all the way back to 1999 and NVD’s predecessor, the Internet Category of Attack toolkit (ICAT), which was originally an access database of attack scripts. The ICAT name can be traced to one of the largest defense contractors, Booz Allen Hamilton (BAH). ICAT originally involved students from the SANS Institute, who worked as analysts on the project. ICAT later faced funding challenges but was kept alive through the efforts of SANS and NIST employees, going on to reach over 10,000 vulnerabilities before receiving additional funding from the Department of Homeland Security (DHS) to create a vulnerability database, which was rebranded as the NVD known today. As the project evolved, NVD went on to adopt popular vulnerability data and scoring standards that are still in use today, such as the Common Vulnerability Scoring System (CVSS) and Common Platform Enumeration (CPE).

As of 2021, NVD contains over 150,000 vulnerabilities, a number that continues to grow as new vulnerabilities emerge. NVD is used by professionals around the world who are interested in vulnerability data, as well as by vendors and tools looking to correlate vulnerability findings with their associated details.

NVD facilitates this process by analyzing CVEs that have been published in the CVE dictionary. By referencing the CVE dictionary and performing additional analysis, NVD staff produce important metadata about vulnerabilities, including CVSS scores, CWE types and applicability statements in the form of CPEs. It’s worth noting that NVD staff don’t perform vulnerability testing themselves; they rely on insights and information from vendors and third-party security researchers to create the attributes discussed above. As new information emerges, NVD often revises metadata such as CVSS scores and CWE information.

NVD integrates information from the CVE program, which is essentially a dictionary of vulnerabilities, as discussed above. NVD assesses newly published CVEs after they are integrated into NVD, using a rigorous analysis process. This includes reviewing reference material for the CVE, including publicly available information on the Internet. Each CVE is assigned a CWE identifier to help categorize the vulnerability, along with exploitability and impact metrics through CVSS. Applicability statements are given through CPEs so that the specific versions of software, hardware or systems affected are identified. This helps organizations take the appropriate action depending on whether the vulnerability impacts the specific hardware and software they are using. Once this initial analysis and assessment is performed, the assigned metadata, such as CWEs, CVSS scores and CPEs, is reviewed by a senior analyst as a quality assurance step before it is ultimately published on the NVD website and associated data feeds.

NVD offers a rich set of data feeds and APIs for organizations and individuals to consume published vulnerability data. APIs allow interested parties to consume vulnerability information programmatically, in a much more automated and scalable manner than manually reviewing the data feeds. NVD’s APIs also offer other benefits, such as frequent updates, search and data matching, and they are often used by security product vendors to provide vulnerability data as part of their product offerings.
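As an illustration of programmatic consumption, the Python sketch below builds a request URL for NVD’s CVE API and pulls a few enriched fields (CVSS v3.1 base score and vector, CWE IDs) out of a response. It assumes the 2.0 endpoint (`https://services.nvd.nist.gov/rest/json/cves/2.0`), its `cveId` parameter and its `vulnerabilities` / `cve` / `metrics` / `cvssMetricV31` / `weaknesses` response layout; check NIST’s current developer documentation before relying on them. `build_cve_url`, `summarize` and `fetch` are hypothetical helper names.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed NVD CVE API 2.0 endpoint.
NVD_CVE_API = "https://services.nvd.nist.gov/rest/json/cves/2.0"


def build_cve_url(cve_id: str) -> str:
    """Build a request URL that looks up a single CVE by ID."""
    return f"{NVD_CVE_API}?{urlencode({'cveId': cve_id})}"


def summarize(response: dict) -> list[dict]:
    """Extract the CVE ID, CVSS v3.1 base score/vector and CWE IDs per result."""
    results = []
    for item in response.get("vulnerabilities", []):
        cve = item["cve"]
        v31 = cve.get("metrics", {}).get("cvssMetricV31", [])
        # Prefer the entry marked "Primary"; fall back to the first one, if any.
        metric = next((m for m in v31 if m.get("type") == "Primary"),
                      v31[0] if v31 else None)
        cvss = metric["cvssData"] if metric else {}
        cwes = [d["value"]
                for w in cve.get("weaknesses", [])
                for d in w.get("description", [])]
        results.append({
            "id": cve["id"],
            "base_score": cvss.get("baseScore"),
            "vector": cvss.get("vectorString"),
            "cwes": cwes,
        })
    return results


def fetch(cve_id: str) -> list[dict]:
    """Perform the live request (unauthenticated requests are rate limited)."""
    with urlopen(build_cve_url(cve_id), timeout=30) as resp:
        return summarize(json.load(resp))
```

Separating URL building and parsing from the network call keeps the parsing logic testable against saved responses.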

Sonatype OSS Index

As OSS adoption has continued to grow, and OSS increasingly makes up significant portions of modern applications and products, there has been more demand to understand the risk associated with OSS components. The Sonatype OSS Index has emerged as one reputable source to aid with this. The OSS Index provides a free catalog of millions of OSS components, as well as scanning tools that organizations can use to identify vulnerabilities in OSS components, understand the associated risk and ultimately drive that risk down. The OSS Index also provides remediation recommendations and, much like NVD, has a robust API for scanning and querying as part of integrations.

In addition to letting users search for OSS components and find information related to their vulnerabilities, the OSS Index supports scanning projects for OSS vulnerabilities and integrating with modern CI/CD toolchains through the index’s REST API. As part of the broader push to “shift security left”, moving security earlier in the SDLC, this toolchain and build-time integration facilitates identifying vulnerabilities in OSS components before code is deployed to a production runtime environment. The OSS Index API integration is used by many popular scanning tools for various programming languages, such as the Java Maven plugin, Rust’s Cargo Pants, OWASP Dependency-Check and Python’s ossaudit, among several others. It is worth noting that while the OSS Index is free for community use, it doesn’t include any specially curated intelligence or insight from experts. Sonatype does, however, offer other services that provide additional intelligence, manage libraries and provide automation capabilities.
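To give a feel for the REST integration, here is a Python sketch that prepares a request for OSS Index’s component report endpoint, which identifies components by Package URL (purl) coordinates. The endpoint path, the `coordinates` request field, the `vulnerabilities` / `cve` / `id` response fields and the optional basic-auth credentials are assumptions based on Sonatype’s v3 API and may change; the helper names are hypothetical. The sketch only builds the request and parses a report, leaving the actual network call to the caller.

```python
import base64
import json
from typing import Optional
from urllib.request import Request

# Assumed OSS Index v3 endpoint; verify against Sonatype's current API docs.
OSS_INDEX_REPORT_URL = "https://ossindex.sonatype.org/api/v3/component-report"


def maven_purl(group: str, artifact: str, version: str) -> str:
    """Build a Package URL (purl) for a Maven artifact."""
    return f"pkg:maven/{group}/{artifact}@{version}"


def build_report_request(purls: list[str], user: Optional[str] = None,
                         token: Optional[str] = None) -> Request:
    """Prepare a POST request asking OSS Index for a report on each purl."""
    body = json.dumps({"coordinates": purls}).encode("utf-8")
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    if user and token:
        # Assumed: OSS Index accepts HTTP basic auth with a user name and API token.
        creds = base64.b64encode(f"{user}:{token}".encode("utf-8")).decode("ascii")
        headers["Authorization"] = f"Basic {creds}"
    return Request(OSS_INDEX_REPORT_URL, data=body, headers=headers, method="POST")


def vulnerable_components(report: list[dict]) -> dict[str, list[str]]:
    """Map each vulnerable coordinate to its CVE IDs (or OSS Index IDs if no CVE)."""
    return {
        entry["coordinates"]: [v.get("cve") or v.get("id") for v in entry["vulnerabilities"]]
        for entry in report
        if entry.get("vulnerabilities")
    }


if __name__ == "__main__":
    purl = maven_purl("org.apache.logging.log4j", "log4j-core", "2.14.1")
    req = build_report_request([purl])
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
    # Sending it would be: urllib.request.urlopen(req, timeout=30)
```

In a CI/CD pipeline, a build step could fail when `vulnerable_components` returns a non-empty mapping.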

Open-Source Vulnerabilities (OSV) Database

In 2021, Google Security launched the OSV Project with the aim of “improving vulnerability triage for developers and consumers of open-source software”. The project evolved out of earlier Google Security efforts dubbed “Know, Prevent, Fix”, and OSV strives to provide data on where vulnerabilities are introduced and where they get fixed, so software consumers can understand how they are impacted and how to mitigate any risk. OSV’s goals include minimizing the work maintainers must do to publish vulnerabilities and improving the accuracy of vulnerability queries for downstream consumers. OSV automates triage workflows for open-source package consumers through a robust API for querying vulnerability data. OSV also reduces the overhead for software maintainers trying to provide accurate lists of affected versions across commits and branches that may impact downstream consumers.
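OSV’s query API accepts either a package name, ecosystem and version, or a commit hash. The following Python sketch assumes the `https://api.osv.dev/v1/query` endpoint with its `package` / `version` / `commit` request fields and `vulns` response field; `build_query`, `build_request`, `vuln_ids` and `query_osv` are hypothetical helper names.

```python
import json
from typing import Optional
from urllib.request import Request, urlopen

# Assumed OSV query endpoint; see the OSV API documentation for details.
OSV_QUERY_URL = "https://api.osv.dev/v1/query"


def build_query(name: Optional[str] = None, ecosystem: Optional[str] = None,
                version: Optional[str] = None, commit: Optional[str] = None) -> dict:
    """Query by commit hash, or by package name + ecosystem + version."""
    if commit:
        return {"commit": commit}
    if not (name and ecosystem and version):
        raise ValueError("provide name, ecosystem and version, or a commit hash")
    return {"version": version, "package": {"name": name, "ecosystem": ecosystem}}


def build_request(payload: dict) -> Request:
    """Wrap a query payload in a JSON POST request."""
    return Request(OSV_QUERY_URL, data=json.dumps(payload).encode("utf-8"),
                   headers={"Content-Type": "application/json"}, method="POST")


def vuln_ids(response: dict) -> list[str]:
    """Extract vulnerability IDs; a response without "vulns" means no matches."""
    return [vuln["id"] for vuln in response.get("vulns", [])]


def query_osv(payload: dict) -> list[str]:
    """Send the query and return the matching vulnerability IDs."""
    with urlopen(build_request(payload), timeout=30) as resp:
        return vuln_ids(json.load(resp))
```

For example, `query_osv(build_query("jinja2", "PyPI", "2.4.1"))` would ask OSV which known vulnerabilities affect that release.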

The Google Security team has published blog posts demonstrating how OSV connects SBOMs to vulnerabilities, thanks to OSV’s ability to describe vulnerabilities in a format specifically designed for mapping to open-source package versions and commit hashes. OSV also aggregates information from a variety of sources, such as the GitHub Advisory Database (GHSA) and the Global Security Database (GSD). There is even an OSS tool dubbed “spdx-to-osv” that creates an OSV vulnerability JSON file from information listed in an SPDX document.

OSV isn’t just a database, available at https://osv.dev; it is also a schema for the Open Source Vulnerability format. OSV points out that there are many vulnerability databases but no standardized interchange format. Organizations looking for widespread vulnerability database coverage must wrestle with the reality that each database has its own unique format and schema. The same goes for databases looking to exchange information with one another. This hinders the adoption of multiple databases by software consumers and stymies publishing to multiple databases by software producers. OSV aims to provide a format that all vulnerability databases can rally around, allowing broader interoperability among vulnerability databases and easing the burden on software consumers, producers and security researchers looking to use multiple vulnerability databases. The OSV format is JSON-based, and its Field Details define fields such as id, published, withdrawn, related, summary and severity, among several others, including sub-fields.
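To show how the schema lets consumers map a package version to a vulnerability, the Python sketch below evaluates an OSV-style record whose `affected` entry carries a `SEMVER` range of `introduced` / `fixed` events. It is deliberately simplified: the record is hypothetical, versions are assumed to be plain `MAJOR.MINOR.PATCH`, and the `last_affected` and `limit` events that the schema also defines are ignored.

```python
from typing import Iterable

# Hypothetical OSV-style record; field names follow the OSV schema.
RECORD = {
    "id": "EXAMPLE-2022-0001",
    "published": "2022-05-30T00:00:00Z",
    "modified": "2022-06-01T00:00:00Z",
    "summary": "Hypothetical flaw in example-lib",
    "affected": [
        {
            "package": {"ecosystem": "npm", "name": "example-lib"},
            "ranges": [
                {
                    "type": "SEMVER",
                    "events": [
                        {"introduced": "1.0.0"}, {"fixed": "1.4.2"},
                        {"introduced": "2.0.0"}, {"fixed": "2.0.3"},
                    ],
                }
            ],
        }
    ],
}


def parse_semver(version: str) -> tuple[int, ...]:
    """Simplified SemVer parsing: MAJOR.MINOR.PATCH only (no pre-release tags)."""
    if version == "0":  # OSV uses "0" to mean "from the very first version"
        return (0, 0, 0)
    return tuple(int(part) for part in version.split("."))


def in_range(version: str, events: Iterable[dict]) -> bool:
    """Walk events in version order; the last event at or below `version` decides."""
    target = parse_semver(version)
    affected = False
    for event in sorted(events, key=lambda e: parse_semver(next(iter(e.values())))):
        kind, value = next(iter(event.items()))
        if parse_semver(value) > target:
            break
        if kind == "introduced":
            affected = True
        elif kind == "fixed":
            affected = False  # the fixed version itself is not affected
    return affected


def is_affected(record: dict, ecosystem: str, name: str, version: str) -> bool:
    """Check explicit version lists and SEMVER ranges for a matching package."""
    for entry in record.get("affected", []):
        package = entry["package"]
        if (package["ecosystem"], package["name"]) != (ecosystem, name):
            continue
        if version in entry.get("versions", []):
            return True
        for rng in entry.get("ranges", []):
            if rng["type"] == "SEMVER" and in_range(version, rng["events"]):
                return True
    return False
```

With this record, `1.2.0` and `2.0.0` are reported as affected, while `1.4.2`, `1.9.9` and `2.0.3` are not.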

Global Security Database (GSD)

Despite the wide adoption of the CVE Program, many have pointed out issues with the program, its approach and its inability to keep pace with the dynamic technological landscape. With the emergence of cloud computing, groups such as the Cloud Security Alliance (CSA) have grown into reputable industry leaders for guidance and research in the modern era of cloud-driven IT. Among CSA’s notable efforts is the creation of the Global Security Database (GSD), which presents itself as a modern approach to a modern problem, claiming that CVE is an antiquated approach that hasn’t kept pace with the complicated modern IT landscape. The effort has been spearheaded by Josh Bressers and Kurt Seifried, both of whom have extensive experience and expertise in vulnerability identification and governance, both at Red Hat and, in Kurt’s case, even serving on the CVE board.

In their CSA blog post “Why We Created the Global Security Database”, Kurt lays out various reasons for the creation of the GSD and why other programs, such as CVE, have fallen short. The article traces the origins of vulnerability identification back to before CVE, to Bugtraq IDs, which required interested parties to subscribe and go read the available vulnerability information. The author describes the growth of the CVE program, which initially recorded only a thousand or so findings per year, peaking at 8,000 CVEs before beginning to decline in 2011. The article also shows the growth of CVE Numbering Authorities (CNAs), as discussed in our section on the CVE Program. However, despite the growth in CNAs, it points out that nearly 25% of CNAs have been inactive for at least a year. The number of CVEs published and assigned also peaked in 2017, aside from a spike in 2020 that declined the subsequent year.

The article also describes earlier efforts that Josh and Kurt were involved with, such as the Distributed Weakness Filing project, and shows how even the Linux Kernel got involved with that effort. This stands in stark contrast to the kernel’s refusal to work with the CVE Project, which Greg Kroah-Hartman discusses at length in the talk titled “Kernel Recipes 2019 - CVEs are dead, long live the CVE!”. In the talk, Greg points out that the CVE Program doesn’t work well with the Linux Kernel due to the rate at which fixes are applied and backported to users through a myriad of methods. Greg explains that the average “request to fix” timeline for Linux CVEs is 100 days, indicating how little concern there is for Linux Kernel CVEs and, at a broad level, how the Linux project moves too fast for the rigidly governed CVE process.

The GSD project announcement describes how services have eaten the world and how modern software and applications are overwhelmingly delivered as-a-Service, which is hard to argue with given the proliferation of cloud computing in its various models, most notably, for applications, Software-as-a-Service (SaaS). The authors explain how the CVE Program lacks a coherent approach to covering as-a-Service applications.

Other changes cited by the GSD project leads include the rampant growth of package ecosystems, such as Python’s at around 200,000 packages and npm’s at 1.3 million. There is also a perception that the CVE Program lacks transparency, community access and engagement, as well as data on vendors’ use of OSS packages, with Log4j cited as an example.

If you’re interested in getting involved in the open, community-driven GSD effort, you can find out more on the GSD Home Page or through its communication channels, such as Slack or the mailing list.

Common Vulnerability Scoring System (CVSS)

While the CVE Program provides a way to identify and record vulnerabilities, and NVD enriches CVEs and presents them in a robust database, CVSS assesses the severity of security vulnerabilities and assigns severity scores. CVSS strives to capture the technical characteristics of software, hardware and firmware vulnerabilities. It offers an industry-standard way of scoring vulnerability severity that is both platform- and vendor-agnostic and is entirely transparent about the characteristics and methodology from which a score is derived.

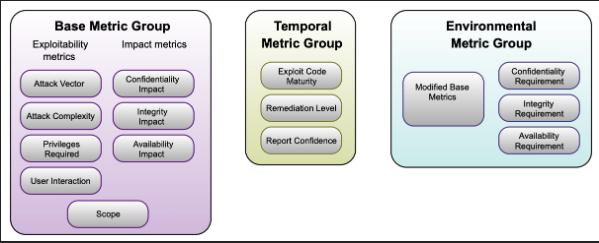

CVSS originated from research by the National Infrastructure Advisory Council (NIAC) in the early 2000s, with CVSS version 1 released in early 2005. While NIAC’s research led to the creation of CVSS, in 2005 NIAC selected the Forum of Incident Response and Security Teams (FIRST) to lead and manage the CVSS initiative going forward. FIRST is a US-based non-profit organization that aids computer security incident response teams with resources such as CVSS. While CVSS is owned and operated by FIRST, other organizations can use or implement CVSS if they adhere to FIRST’s CVSS specification guidance. CVSS has gone through several iterations: the original CVSS in early 2005, CVSS v2 in 2007 and CVSS v3 in 2015. At the time of this writing, CVSS is on the v3.1 specification. CVSS consists of three core metric groups.

The three metric groups are Base, Temporal and Environmental. The Base group represents the severity of a vulnerability based on intrinsic characteristics that remain constant, and it assumes a worst-case scenario regardless of the deployed environment. Temporal metrics, on the other hand, adjust the Base score to account for factors that change over time, including the actual availability of an exploit. Lastly, Environmental metrics adjust both the Base and Temporal severity scores based on the specifics of the computing environment, including the presence of mitigations that reduce risk. Generally, the Base score is assigned by the organization that owns the product or by third parties, such as security researchers. These are the scores and metrics that get published, as they remain constant once assigned. Since the publishing party won’t have the full context of the hosting or operating environment, or of any mitigating controls in place, it is up to CVSS consumers to adjust scores using Temporal and Environmental metrics, if desired. Mature organizations that make informed adjustments based on these factors are better able to prioritize vulnerability management activities, based on more accurate risk calculations that account for their specific environment and controls.

Diving a little deeper into each metric group, the Base metric group, as mentioned, involves intrinsic characteristics that stay constant over time and regardless of the environment the vulnerable component exists in. It involves both Exploitability metrics and Impact metrics. Exploitability metrics include the attack vector, the attack complexity and the privileges required. These metrics revolve around a vulnerable component and the ease with which it can be exploited. On the flip side of Exploitability are the Impact metrics, which use the traditional cybersecurity triad of Confidentiality, Integrity and Availability. These represent the consequences of the vulnerability being exploited and the impact exploitation would have on the vulnerable component.

Base Metrics

Base metrics make assumptions, such as the attacker’s knowledge of the system and its defense mechanisms. As discussed above, the Base metric group includes both Exploitability and Impact metrics. The Exploitability metrics are Attack Vector, Attack Complexity, Privileges Required and User Interaction, and the Base group also includes Scope.

Attack Vector provides context about the methods of exploitation available to a malicious actor. Factors such as the physical proximity required for exploitation factor into the scoring for this metric because, except in rare circumstances, the number of malicious actors with physical proximity to a vulnerable component will be lower than the number with logical access. Attack Vector includes the metric values Network, Adjacent, Local and Physical. Network means the vulnerable component is bound to the network stack and remotely exploitable, potentially from across the entire Internet. Adjacent, on the other hand, is bound to a specific physical or logical scope, such as sharing a Bluetooth network or range, or being on the same local subnet. Local involves factors such as accessing the system’s keyboard or console, but could also include remote SSH access. Local may also require user interaction, such as through phishing attacks and social engineering. Lastly, the Physical metric value means the malicious actor needs physical access to the vulnerable component to take advantage of the vulnerability.

While Attack Vector is based on the paths and methods available to an attacker, Attack Complexity describes conditions beyond the malicious actor’s control that must exist for exploitation to succeed. These could include specific configurations being present on the vulnerable component. Attack Complexity is either Low or High. Low describes a situation that allows the malicious actor repeatable success in exploiting the vulnerable component, whereas High is more nuanced and requires the malicious actor to gather knowledge, prepare a target environment or inject themselves into the network path, such as with a Man-in-the-Middle (MitM) style attack.

Privileges Required describes the level of privileges an attacker must possess to successfully exploit a vulnerability. Situations where no privileges are required generate the highest Base score, whereas the score would be lower if, for example, exploitation required the malicious actor to have administrative access. Privileges Required can be None, Low or High. None means no access to systems or components is required, Low means basic user capabilities are required and High means administrative access to the vulnerable component is required to exploit it.

User Interaction describes the level of involvement that a human user, other than the malicious actor, must have for a compromise to succeed. Some vulnerabilities can be exploited without any involvement from anyone other than the malicious actor, while others require involvement from additional users. This metric is straightforward, with either None or Required as options.

Alongside the Exploitability metrics sits Scope, which describes the potential for exploitation of a vulnerable component to extend beyond its own security scope and impact other resources and components. This metric requires an understanding of which components fall under the vulnerable component’s security authority (its jurisdiction) to begin with, and of the extent to which exploitation can affect resources beyond it. Two metric values exist, Unchanged and Changed, representing whether exploitation could impact components managed by another security authority.

Within the Base metrics there are also the Impact metrics, represented by the traditional CIA triad. CVSS recommends that analysts keep their impact projections reasonable and aligned with the realistic impacts a malicious actor could have. Those familiar with NIST Risk Management Framework guidance around CIA will notice a similarity. The CIA metric values are None, Low or High: None means no impact to CIA, Low means some loss of CIA and High means a total loss of CIA, the most devastating potential impact possible in the given scenario related to exploitation of the vulnerability.
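To make the Base metrics concrete, the following Python sketch computes a CVSS v3.1 Base score from the Exploitability, Scope and Impact metric values described above, using the weights, equations and `Roundup` function from the v3.1 specification as we understand them. Treat it as illustrative and cross-check results against FIRST’s official calculator; `roundup` and `base_score` are our own names.

```python
import math

# CVSS v3.1 Base metric weights.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # PR weighs more when Scope changes
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value, float-safe."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def base_score(m: dict) -> float:
    """m maps metric abbreviations (AV, AC, PR, UI, S, C, I, A) to values."""
    changed = m["S"] == "C"
    # Impact Sub-Score from the CIA triad.
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))
```

For example, `base_score({"AV": "N", "AC": "L", "PR": "N", "UI": "N", "S": "U", "C": "H", "I": "H", "A": "H"})` yields 9.8. Note how Scope feeds into both the Privileges Required weight and the Impact equation.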

Temporal Metrics

As mentioned previously, Temporal metrics can change over time due to factors such as an active exploit driving a score up, or an available patch that could drive a CVSS score down. Keep in mind, these factors are related to the industry, not specific to the user’s environment or specific mitigations an organization may have put in place. Temporal Metrics include Exploit Code Maturity, Remediation Level, and Report Confidence.

Exploit Code Maturity is based on the current state of exploit techniques and the availability of code to exploit the vulnerability. Exploitation that is actually occurring is often referred to as “in-the-wild” exploitation, and a notable example is CISA’s “Known Exploited Vulnerabilities Catalog”, which is updated regularly with vulnerabilities known to be actively exploited and which Federal agencies are required to patch. The catalog already includes hundreds of vulnerabilities, spanning a wide variety of both software and hardware, that may apply to many organizations. Exploit Code Maturity metric values are Unproven, Proof-of-Concept, Functional, High and Not Defined. These represent everything from exploit code being unavailable or purely theoretical, all the way to being fully functional and autonomous, requiring no manual trigger.

Remediation Level helps drive vulnerability prioritization efforts and reflects what is available, from workarounds through official fixes. The potential metric values are Unavailable, Workaround, Temporary Fix and Official Fix (plus Not Defined). There may be no fix available at all, or unofficial workarounds may be required until an official fix is published, verified and vetted for consumption and implementation.

The last Temporal metric, Report Confidence, deals with the confidence in the reporting of the vulnerability and its associated technical details. Initial reports, for example, may come from unverified sources but may mature to be validated by vendors or reputable security researchers. Potential metric values include Unknown, Reasonable and Confirmed, representing increasing confidence in the vulnerability and the reported details. These factors can influence the overall score of the vulnerability.
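Temporal metrics act as multipliers on the Base score. This Python sketch applies the CVSS v3.1 Temporal weights for Exploit Code Maturity (E), Remediation Level (RL) and Report Confidence (RC), with `X` standing for Not Defined; the weights are transcribed from the v3.1 specification and are worth verifying against it. The function names are our own.

```python
import math

# CVSS v3.1 Temporal metric weights ("X" = Not Defined).
EXPLOIT_CODE_MATURITY = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}
REMEDIATION_LEVEL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}
REPORT_CONFIDENCE = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value, float-safe."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """Scale a Base score by Exploit Code Maturity, Remediation Level and Report Confidence."""
    return roundup(
        base * EXPLOIT_CODE_MATURITY[e] * REMEDIATION_LEVEL[rl] * REPORT_CONFIDENCE[rc]
    )
```

For instance, a 9.8 Base score with Unproven exploit code, an Official Fix and a Confirmed report (`temporal_score(9.8, e="U", rl="O", rc="C")`) drops to 8.5.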

Environmental Metrics

For organization-specific context, the Environmental metric group comes into play. It will look different for every software consumer, since every environment is unique and has a range of factors that could affect the Environmental score, such as the technology stack, architecture and any mitigating controls that may be present.

Environmental metrics let organizations adjust Base scores for factors specific to their operating environment. The modifications revolve around the CIA triad and allow analysts to assign values (the Confidentiality, Integrity and Availability Requirements) aligned with the role each CIA aspect plays in the organization’s business functions or mission. Modified Base metrics are the result of overriding Base metrics due to the specifics of the user’s environment. It is worth noting that these modifications require a detailed understanding of both the Base and Temporal metric factors to make an accurate modification that doesn’t downplay the risk a vulnerability presents to an organization.
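The Python sketch below illustrates the Environmental calculation for the common case where the (Modified) Scope is Unchanged. It treats the metric dictionary passed in as the Modified Base metrics the analyst has settled on, applies the Confidentiality, Integrity and Availability Requirements (CR, IR, AR) as weights of 0.5, 1.0 or 1.5, and takes an optional `temporal_factor`, a hypothetical parameter holding the product of the E, RL and RC weights. The changed-scope branch of the v3.1 equations is intentionally left out, so treat this as a partial sketch.

```python
import math

# CVSS v3.1 weights; PR uses the Scope Unchanged values only.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR = {"N": 0.85, "L": 0.62, "H": 0.27}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
REQUIREMENT = {"X": 1.0, "L": 0.5, "M": 1.0, "H": 1.5}  # CR, IR, AR


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value, float-safe."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def environmental_score(modified: dict, cr: str = "X", ir: str = "X",
                        ar: str = "X", temporal_factor: float = 1.0) -> float:
    """Environmental score for Scope Unchanged; `modified` holds MAV, MAC, ... as AV, AC, ..."""
    if modified.get("S", "U") != "U":
        raise NotImplementedError("this sketch only covers Modified Scope Unchanged")
    # Modified Impact Sub-Score, weighted by the security requirements and capped at 0.915.
    miss = min(
        1 - (1 - REQUIREMENT[cr] * CIA[modified["C"]])
        * (1 - REQUIREMENT[ir] * CIA[modified["I"]])
        * (1 - REQUIREMENT[ar] * CIA[modified["A"]]),
        0.915,
    )
    modified_impact = 6.42 * miss
    modified_exploitability = (
        8.22 * AV[modified["AV"]] * AC[modified["AC"]] * PR[modified["PR"]] * UI[modified["UI"]]
    )
    if modified_impact <= 0:
        return 0.0
    return roundup(roundup(min(modified_impact + modified_exploitability, 10)) * temporal_factor)
```

For a Network-reachable vector with High impact across the board, setting all three requirements to Low lowers the score from 9.8 to 8.0, while leaving them Not Defined reproduces the Base score.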

CVSS Rating Scale

CVSS maps numeric scores, which range from 0.0 to 10.0, onto a qualitative severity rating scale.
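The mapping from numeric score to qualitative rating is a simple lookup. This Python sketch encodes the CVSS v3.1 rating bands: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9) and Critical (9.0 to 10.0). The function name is our own.

```python
def severity_rating(score: float) -> str:
    """Map a CVSS v3.x score (0.0 to 10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"
```

Because CVSS scores carry a single decimal place, comparing against the lower bound of the next band is enough to place each score.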

All the various CVSS scoring metrics and methods produce what is known as a Vector String, which the CVSS specification defines as a “specifically formatted text string that contains each value assigned to each metric and should always be displayed with the vulnerability score”. The specification describes each metric by its Metric Group, Metric Name, abbreviation, possible values and whether it is mandatory. For example, the vector string CVSS:3.1/AV:N/AC:L/PR:H/UI:N/S:U/C:L/I:L/A:N decodes to “Attack Vector: Network, Attack Complexity: Low, Privileges Required: High, User Interaction: None, Scope: Unchanged, Confidentiality: Low, Integrity: Low, Availability: None”.
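Because the vector string is the canonical record of how a score was derived, it is worth parsing and validating rather than treating it as opaque text. This Python sketch checks for a `CVSS:3.0` or `CVSS:3.1` prefix and the eight mandatory Base metrics, passes optional Temporal and Environmental metrics through without validating them, and renders the human-readable form. `parse_vector` and `describe` are hypothetical helper names.

```python
# Mandatory CVSS v3.x Base metrics: abbreviation -> (name, allowed values).
BASE_METRICS = {
    "AV": ("Attack Vector", {"N": "Network", "A": "Adjacent", "L": "Local", "P": "Physical"}),
    "AC": ("Attack Complexity", {"L": "Low", "H": "High"}),
    "PR": ("Privileges Required", {"N": "None", "L": "Low", "H": "High"}),
    "UI": ("User Interaction", {"N": "None", "R": "Required"}),
    "S": ("Scope", {"U": "Unchanged", "C": "Changed"}),
    "C": ("Confidentiality", {"N": "None", "L": "Low", "H": "High"}),
    "I": ("Integrity", {"N": "None", "L": "Low", "H": "High"}),
    "A": ("Availability", {"N": "None", "L": "Low", "H": "High"}),
}


def parse_vector(vector: str) -> dict[str, str]:
    """Split a CVSS v3.x vector into metrics, rejecting malformed input."""
    prefix, *parts = vector.strip().split("/")
    if prefix not in ("CVSS:3.0", "CVSS:3.1"):
        raise ValueError(f"unsupported or missing version prefix: {prefix!r}")
    metrics: dict[str, str] = {}
    for part in parts:
        name, sep, value = part.partition(":")
        if not sep or not value or name in metrics:
            raise ValueError(f"malformed or duplicate metric: {part!r}")
        metrics[name] = value
    for name, (_, allowed) in BASE_METRICS.items():
        if metrics.get(name) not in allowed:
            raise ValueError(f"missing or invalid Base metric {name}")
    return metrics


def describe(metrics: dict[str, str]) -> str:
    """Render the Base metrics in human-readable form."""
    return ", ".join(
        f"{label}: {values[metrics[name]]}" for name, (label, values) in BASE_METRICS.items()
    )
```

Running `describe(parse_vector("CVSS:3.1/AV:N/AC:L/PR:H/UI:N/S:U/C:L/I:L/A:N"))` produces the readable form of that vector, and a vector missing any Base metric raises `ValueError`.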

Calculating the metrics requires a detailed understanding of the possible fields, values and other factors, and it is strongly recommended to visit the CVSS v3.1 Specification to learn more about the equations and guidance.

Critiques

Despite its widespread use and adoption, CVSS isn’t without its critics. Security researcher and author Walter Haydock, in his “Deploying Securely” blog, takes aim at CVSS in his article “CVSS: an (inappropriate) industry standard for assessing risk”. In the article, Walter makes the case that CVSS isn’t appropriate for assessing cybersecurity risk. Walter cites several articles from industry leaders demonstrating why CVSS alone shouldn’t be used for risk assessment, despite it generally being used by the industry for exactly that purpose. As Walter’s article and others point out, CVSS is often used to help organizations prioritize vulnerabilities for mitigation or remediation, based on the ease of exploitation and the level of impact if exploitation occurs. Research by organizations such as Tenable points out that over 50% of vulnerabilities are scored as High or Critical by CVSS, regardless of whether they are ever likely to be exploited. It also shows that 75% of all vulnerabilities rated High or Critical never actually have an exploit published for them, yet security teams prioritize these vulnerabilities nonetheless. This means organizations are wasting limited time and resources on vulnerabilities that are unlikely ever to be exploited, rather than addressing vulnerabilities with active exploits. Organizations are potentially missing a large amount of risk by not addressing vulnerabilities rated between 4 and 6 that have active exploits, because they take the blanket approach of chasing CVSS High and Critical vulnerabilities. This is understandable to some extent, as organizations are often dealing with hundreds or thousands of assets (and those are just the ones they know of), each of which often has many vulnerabilities associated with it.
It is difficult to track down vulnerabilities and perform granular vulnerability management when you’re drowning in a sea of vulnerability data, let alone get a clear picture of the assets that belong to your organization and pose a risk.
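One way to act on this critique is to let known exploitation outrank raw CVSS severity. The Python sketch below sorts findings so that anything in a known-exploited set comes first, then orders by CVSS score. The set could, for example, be loaded from CISA’s KEV catalog JSON feed, whose entries we assume carry a `cveID` field under a top-level `vulnerabilities` list. The findings, CVE IDs and helper names are hypothetical, and this is a simple ordering heuristic rather than a full risk model.

```python
from typing import Iterable, NamedTuple


class Finding(NamedTuple):
    cve_id: str
    cvss: float
    asset: str


def kev_ids(feed: dict) -> set[str]:
    """Collect CVE IDs from a KEV-style feed (assumed 'vulnerabilities'/'cveID' layout)."""
    return {entry["cveID"] for entry in feed.get("vulnerabilities", [])}


def prioritize(findings: Iterable[Finding], exploited: set[str]) -> list[Finding]:
    """Known-exploited findings first, then highest CVSS score first."""
    return sorted(findings, key=lambda f: (f.cve_id not in exploited, -f.cvss))


if __name__ == "__main__":
    # Hypothetical findings and feed, for illustration only.
    findings = [
        Finding("CVE-0000-0001", 9.8, "billing-api"),
        Finding("CVE-0000-0002", 5.4, "intranet-wiki"),
        Finding("CVE-0000-0003", 7.5, "build-server"),
    ]
    feed = {"vulnerabilities": [{"cveID": "CVE-0000-0002"}]}
    for f in prioritize(findings, kev_ids(feed)):
        print(f.cve_id, f.cvss, f.asset)
```

Here the Medium-rated but actively exploited finding is handled before the unexploited 9.8, which is exactly the reordering the critique argues for.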

Conclusion

As mentioned in the introduction, there is much more that can be, is being, and will be (by me) written on this topic. Vulnerability Databases, Scoring Methodologies and, more broadly, Vulnerability Management form a complex topic that is only growing in complexity with the rapid growth and integration of technologies. The technological landscape has changed tremendously over the last two decades, and as technologies such as Cloud, Microservices and APIs continue to grow in both adoption and complexity, the cybersecurity industry will need to re-evaluate its approach to Vulnerability Management to keep pace with the changing threat landscape. Luckily, there are people far smarter than myself who are tackling this problem in a transparent, collaborative, community-driven manner. Collectively, we can create a more secure, digitally driven future and society.

If you have any questions, or would like to discuss this topic in more detail, feel free to contact us and we would be happy to schedule some time to chat about how Aquia can help you and your organization.